The MeitY Advisory on "Approving AI Platforms" and its Far-Reaching Issues

From the ISAIL Secretariat

On March 1, 2024, the Ministry of Electronics and Information Technology (MeitY) issued an advisory to digital platforms, emphasising the importance of ensuring that Artificial Intelligence (AI) technologies do not compromise the integrity of the electoral process. Although this directive is not legally binding, it underscores the government's concern over the potential influence of AI on democratic practices.

The Indian Society of Artificial Intelligence and Law acknowledges the proactive steps taken by MeitY to address this issue. However, we believe that for such advisories to be truly effective, they must be made publicly accessible.

Transparency in these matters not only fosters trust but also ensures broader understanding and compliance across all the stakeholders involved, including startups and MSMEs, which form a significant part of our burgeoning AI industry.

It is pertinent to note that the advisory's non-binding nature, as reported by the Economic Times, may not compel platforms to take the necessary precautions, making it a piecemeal approach to a complex challenge. Furthermore, the advisory's absence from official channels such as the Press Information Bureau (PIB) and the MeitY website raises questions about its accessibility and about the government's commitment to open communication.

The Indian AI industry, particularly startups and MSMEs, already faces significant challenges, including high operational costs and limited access to computational resources. In this context, advisories that are not accompanied by practical support or guidelines can exacerbate the sense of uncertainty and hinder innovation.

We have obtained a copy of the advisory, which can be accessed here.

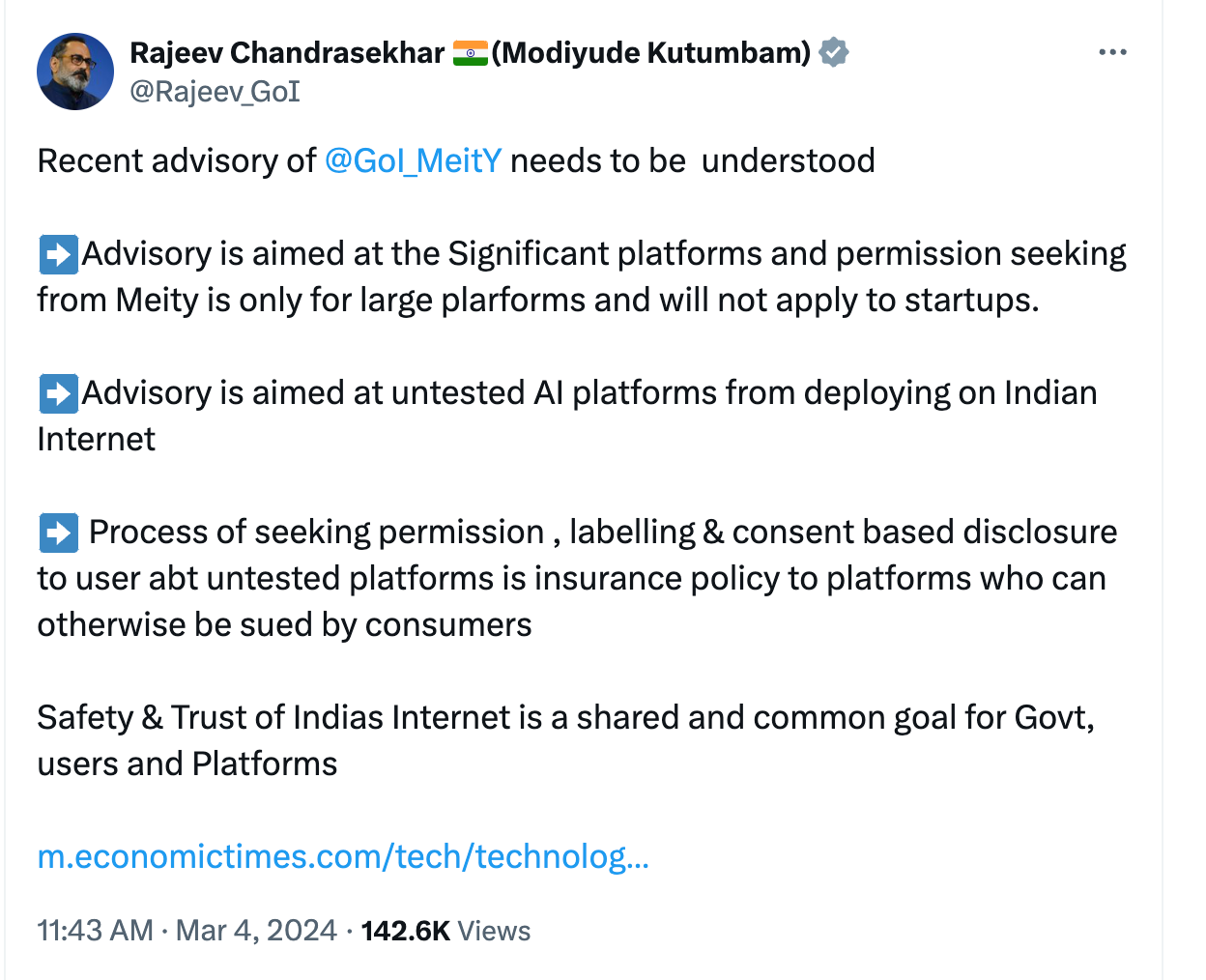

While the Economic Times reported that the advisory applied to "all platforms", the Minister of State for Electronics and Information Technology later clarified in a post on X that it is aimed at "significant platforms". This is a major error.

We believe this was a clear case of poor policy communication. We do, however, acknowledge that the government appears to assume that, for significant platforms (presumably MNCs), complying with the disclosure requirements could serve as a form of insurance policy for platforms, which "can otherwise be sued by consumers".

You can read the MoS's post at https://twitter.com/Rajeev_GoI/status/1764534565715300592

Here is some analysis of the Advisory from Abhivardhan, our Chairperson, who is also the Managing Partner of Indic Pacific Legal Research.

Understanding the Advisory

The advisory issued by the Ministry of Electronics and Information Technology (MeitY) on March 1, 2024, outlines several directives for intermediaries and platforms regarding the use of Artificial Intelligence (AI) models, software, and algorithms. While the intent to safeguard the integrity of the electoral process and prevent misuse of AI is commendable, several points warrant critical analysis:

Lack of Binding Power: The advisory is described as non-binding, which may limit its effectiveness. Without legal enforceability, platforms and intermediaries may not feel compelled to adhere to the guidelines, potentially undermining the advisory's purpose.

Transparency and Public Access: The advisory has not been made publicly available on platforms such as the Press Information Bureau (PIB) or the MeitY website. This lack of transparency can lead to confusion and inconsistency in compliance among stakeholders.

Resource Accessibility for MSMEs and Startups: The advisory does not address the challenges faced by MSMEs and startups, particularly in terms of access to computational resources and the high costs associated with AI development. Without considering these factors, the advisory may inadvertently disadvantage smaller entities in the industry.

Clarity on 'Explicit Permission' from the Government: The requirement for explicit permission from the Government of India to use under-testing or unreliable AI models is vague. It is unclear how this process would be managed, what criteria would be used for granting permission, and how it would impact innovation and time-to-market for AI solutions.

User Consent and Awareness: While the advisory suggests a 'consent popup' mechanism to inform users about the fallibility of AI outputs, it does not provide guidance on how this should be implemented. There is also a risk that such popups could become just another click-through formality, ignored by users.

Labeling of Synthetic Content: The directive to label or embed metadata in synthetic content to identify its origin is a positive step towards transparency. However, the advisory does not specify the technical standards or methods for implementing such labels, which could lead to inconsistent practices.

Potential Penal Consequences: The advisory mentions penal consequences for non-compliance but does not elaborate on what these might entail. A more detailed explanation of the penalties and the process for determining violations would be beneficial for all parties involved.

Reporting and Compliance: The requirement for intermediaries to submit an Action Taken-cum-Status Report within 15 days may be challenging, especially for smaller platforms. The advisory should consider providing a more realistic timeline and support for compliance reporting.

Update #1

Update #2

We have accessed a revised version of the text, and it appears that the Ministry has removed the section of the advisory that required all AI platforms to obtain government approval. However, the arbitrary sections of the advisory remain, and the legality of the advisory may still be questionable.

Call to AI and Tech Start-ups

If any tech start-up or SME feels affected by the terms of the advisory, it may write to the Secretariat of the Indian Society of Artificial Intelligence and Law at executive@isail.co.in with its concerns, and we will get back to it.

You can also read other analyses and works developed by Indic Pacific Legal Research here.