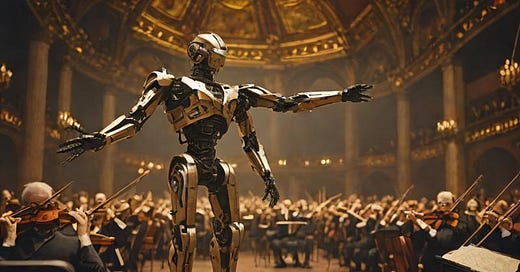

EU AI Act: Navigating the Regulatory Tango - Striking the Right Balance Between Innovation and Safety

From the Advisory Council

This article is authored by Dr Cristina Vanberghen, one of the Distinguished Experts of the ISAIL Advisory Council. Dr Vanberghen is a Senior Expert at the European Commission.

The European Union's recently agreed-upon Artificial Intelligence Act has sparked debate, with French President Emmanuel Macron criticizing the stringent rules it imposes on foundation models, the technology underlying generative AI systems such as OpenAI's ChatGPT. Macron highlighted France's standing in AI, suggesting it rivals the UK but lags behind China and the US, both of which have opted for less restrictive regulatory approaches.

While some argue that the regulations will strengthen digital ethics, others fear they could divert resources from AI innovation to compliance and legal enforcement. European Commission President Ursula von der Leyen, however, defended the AI Act as aligning with European values in the digital age.

The AI Act focuses on safety for generative AI, including frameworks for the responsible deployment of algorithmic models. In this view, regulation is not an impediment to innovation but a facilitator of long-term progress: if safety is not prioritized, trust erodes, and that erosion hinders innovation itself. Regulation can instead cultivate a culture of safety by design in an AI-integrated society, one where extensive data collection and digital product development are driven primarily by private entities and where leading AI developers hold sway over the global economy.

Regulation does not stifle innovation; rather, it can encourage industry to adopt higher standards of trustworthiness and develop better AI. This is particularly important where algorithmic models lack robustness or raise ethical concerns.

Implementing regulation is an ongoing process that involves both horizontal regulation, such as the EU AI Act, and softer, more flexible measures that adapt to the dynamic nature of AI. The ultimate goal is to foster a safe ecosystem for companies and users within national borders. As the definition of AI evolves and concerns arise about the feasibility of international regulatory harmonization, should we lower the bar in the name of competitive markets, potentially compromising consumer protection?

As we navigate the complexities of AI regulation, I agree that it is crucial to strike a delicate balance between innovation and safety. Companies often struggle to comply with regulatory frameworks due to the absence of streamlined processes, compliance tools, and a global approach. The EU AI Act empowers governments to stay vigilant, monitor AI's opportunities and risks, and make timely adjustments to regulatory frameworks. EU institutions and national governments bear a heavy responsibility for implementing the EU AI Act effectively while upholding a human-centric approach to AI.

What we need is a regulatory environment that encourages responsible AI development while protecting users and society from potential harms. Only by striking this balance can we harness the transformative power of AI while upholding human values and ethical principles. The EU AI Act is the right song for the future. So please, don't stop the music!